LiDAR vs. Camera Only – What Is the Best Sensor Suite Combination for Full Autonomous Driving?

The autonomous driving industry has been exploring different combinations of sensors to support the development of full self-driving systems. In this article we examine and compare different approaches to sensor suite setup.

Mainstream sensor combination

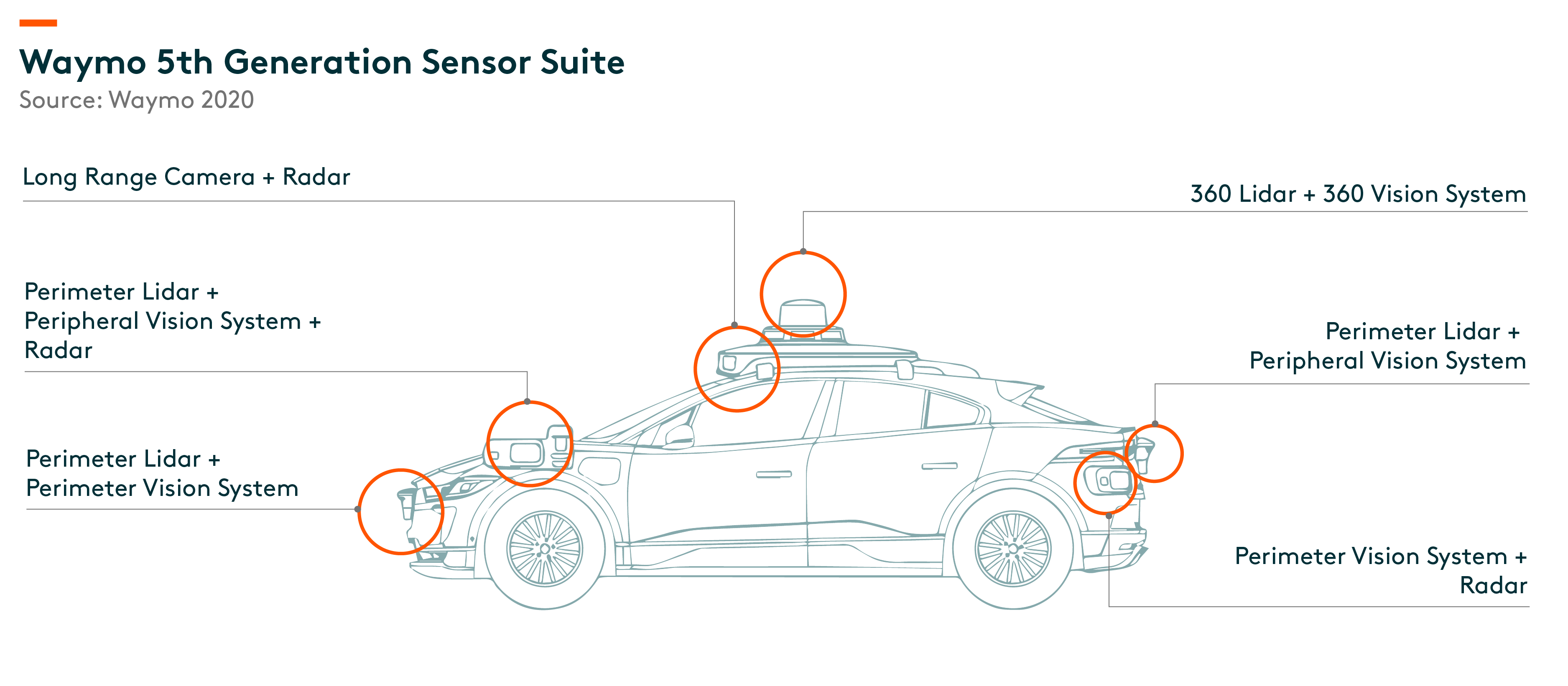

The mainstream sensor suite combines LiDAR, cameras and radar. Waymo, for example, is one of the leading autonomous driving companies that include LiDAR in their sensor suites. The company launched its 5th-generation platform in March 2020, which has an in-house developed 360-degree LiDAR that provides a high-resolution 3D picture of its surroundings. Four perimeter LiDARs are placed at four points around the sides of the vehicle, offering a wide field of view to detect objects close by. These short-range LiDARs provide enhanced spatial resolution and accuracy to navigate tight gaps in city traffic and cover potential blind spots. There are 29 cameras on board to help the vehicle understand its surroundings. The vehicle is also equipped with six radars, which can detect objects at greater distances with a wider field of view.

Full autonomous driving with LiDAR:

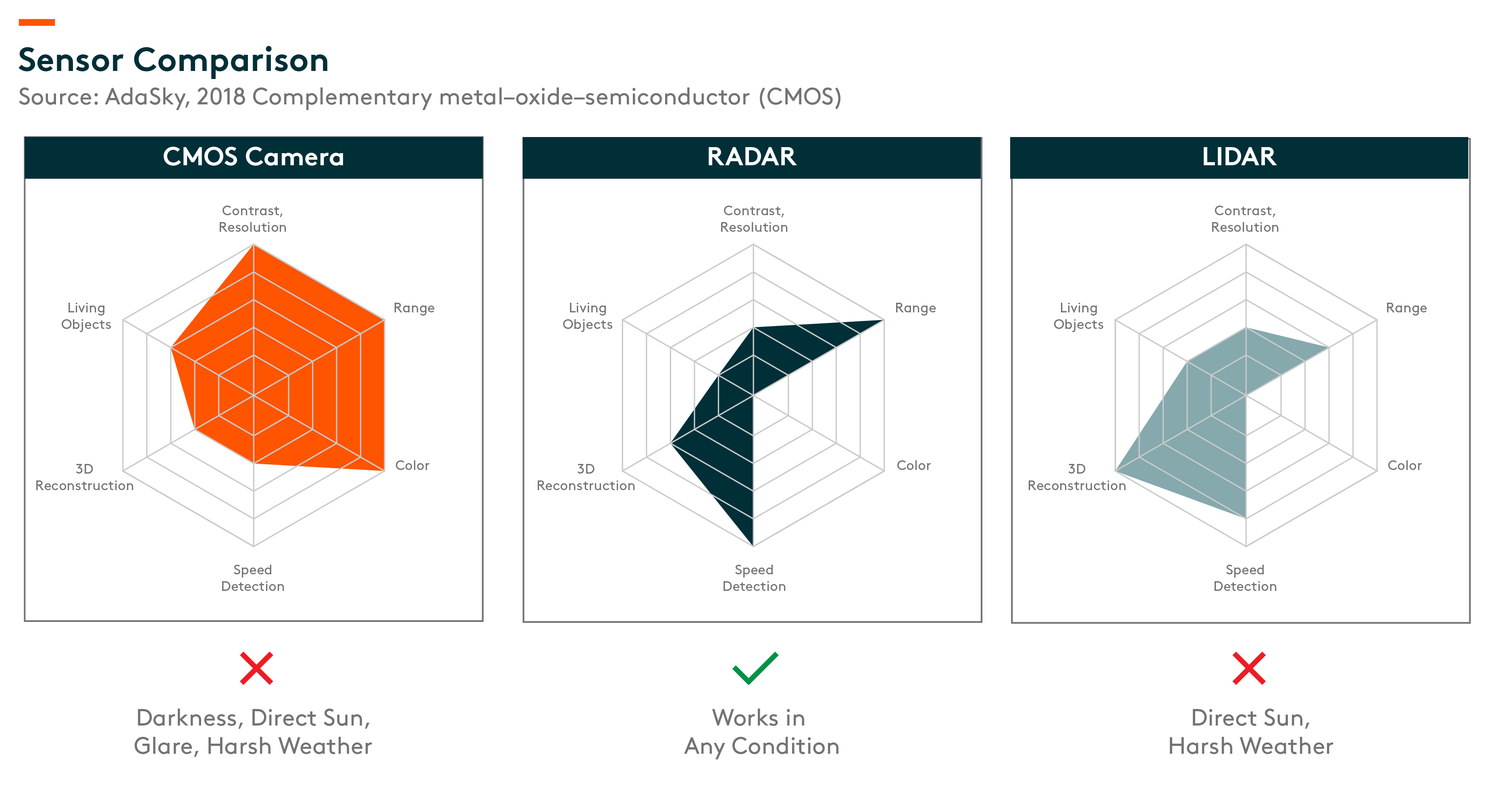

Superior low-light performance. LiDAR carries its own light source, so its performance is not affected in low-light situations. Even in complete darkness, LiDAR continues to work normally.

More accurate 3D measurement. Human eyes measure distance by triangulation: the two eyes view the same object from slightly different positions. We are likewise able to recover 3D environment measurements with a camera-only setup. However, LiDAR offers a more accurate 3D measurement of the environment, and thus can help boost the confidence of the autonomous driving system.
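The triangulation argument can be made concrete with the standard pinhole stereo relation, where depth equals focal length times baseline divided by disparity. The sketch below is only an illustration: the focal length, baseline and matching error are hypothetical values, not the specification of any vehicle discussed here, and a production camera-only system may estimate depth in other ways (for example with learned models).

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from the pinhole stereo relation Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical stereo rig, chosen only for illustration.
FOCAL_PX = 1000.0          # focal length expressed in pixels
BASELINE_M = 0.5           # distance between the two cameras
DISPARITY_ERROR_PX = 0.25  # assumed pixel-matching error

for depth_m in (10.0, 50.0, 100.0):
    # Disparity the rig would observe for an object at this depth.
    disparity_px = FOCAL_PX * BASELINE_M / depth_m
    error_m = depth_error_m(FOCAL_PX, BASELINE_M, depth_m, DISPARITY_ERROR_PX)
    print(f"{depth_m:6.1f} m: disparity {disparity_px:5.1f} px, "
          f"depth error about {error_m:.2f} m")
```

With these assumed numbers the depth error grows with the square of the distance, from about 0.05 m at 10 m to about 5 m at 100 m. This is one way to see why a direct range measurement such as LiDAR can be more accurate at distance than triangulation from images.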

Full autonomous driving without LiDAR:

Tesla is one of the leading companies in autonomous driving system development that does not include LiDAR in its sensor suite. The company uses a combination of eight external cameras, one radar and 12 ultrasonic sensors for the system (Waymo 2020). Arguments for not having LiDAR include:

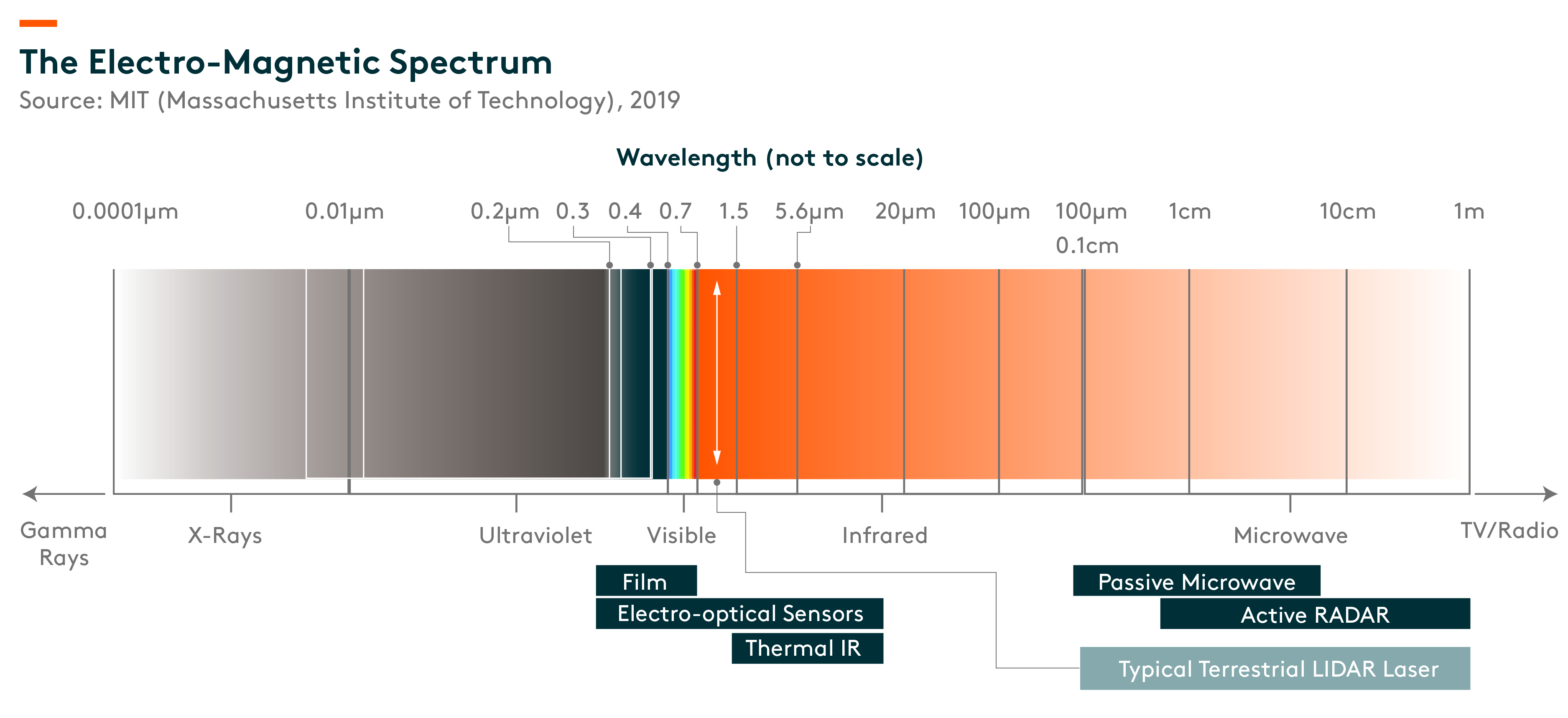

LiDAR faces the same penetration handicap as cameras. LiDAR operates at near-infrared wavelengths, just beyond the visible range, which means its laser light scatters in much the same way as visible light (what the camera collects). In heavy fog or rain, LiDAR is also degraded, because a laser at that wavelength cannot penetrate fog, rain or anything else that blocks visible light, which is similar to what happens to cameras.
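To put the wavelength argument in numbers, the sketch below compares typical values. The article does not state which wavelengths the sensors use, so the figures here are assumptions: 905 nm and 1550 nm are common automotive LiDAR wavelengths, 750 nm is an approximate red edge of visible light, and 77 GHz is a common automotive radar band. None of these are taken from Waymo or Tesla specifications.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

# Assumed, typical values (not from the article or any vendor datasheet).
VISIBLE_RED_EDGE_NM = 750.0
LIDAR_WAVELENGTHS_NM = {"905 nm LiDAR": 905.0, "1550 nm LiDAR": 1550.0}
RADAR_FREQUENCY_GHZ = 77.0


def wavelength_nm_from_ghz(frequency_ghz: float) -> float:
    """Convert a frequency in GHz to a wavelength in nanometres."""
    wavelength_m = SPEED_OF_LIGHT_M_S / (frequency_ghz * 1e9)
    return wavelength_m * 1e9


def ratio_to_visible(wavelength_nm: float) -> float:
    """How many times longer a wavelength is than the visible red edge."""
    return wavelength_nm / VISIBLE_RED_EDGE_NM


for name, wavelength_nm in LIDAR_WAVELENGTHS_NM.items():
    print(f"{name}: {ratio_to_visible(wavelength_nm):.1f}x the visible red edge")

radar_nm = wavelength_nm_from_ghz(RADAR_FREQUENCY_GHZ)
print(f"77 GHz radar: {radar_nm / 1e6:.2f} mm, "
      f"{ratio_to_visible(radar_nm):.0f}x the visible red edge")
```

Under these assumptions the LiDAR wavelengths are within roughly one to two times the longest visible wavelength, while the radar wavelength is thousands of times longer, which is consistent with LiDAR behaving more like a camera than like radar in fog and rain.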

LiDAR provides limited information compared to cameras. The modern driving environment is designed for vision-based systems (i.e. humans). LiDAR presents a precise 3D measurement of the surroundings but without information such as colour and text, so on its own it is not sufficient to provide a good understanding of the surroundings. Camera images, on the other hand, contain richer information that can be interpreted by well-trained neural nets.

Our take:

Companies choose LiDAR because it provides more accurate 3D measurement. From the arguments above we can see that one key problem for full autonomous driving is recovering 3D environment data from 2D images. This requires a well-trained machine learning model that understands driving conditions as well as humans do at all times. Without a significant amount of well-annotated, high-quality data, especially data on long-tail scenarios, it is impossible to train such models. The problem for most companies, including Waymo, is that they do not currently have access to a sufficient volume of annotated real-world data, so for now they adopt LiDAR in their sensor suites to make up for this shortcoming.

Full autonomous driving may be achieved earlier with LiDAR. Waymo has been operating without safety drivers in Phoenix since 2017 (Waymo 2018), while Tesla only launched its full self-driving beta in the US at the end of 2020 (Tesla 2020). Waymo largely focuses its testing in the US, whereas Tesla vehicles are tested all over the world. Waymo’s approach, which combines high-precision maps with LiDAR, will enable it to remove safety drivers sooner in areas that the company has mapped and trained on well.

Both sensor combinations will achieve full autonomy. Waymo has already reached that goal in Phoenix, and the remaining challenge is to replicate it in more cities and areas. We think vision ML (machine learning) models will eventually be mature enough for full autonomous driving. For Tesla, we think it will be able to roll out full autonomous driving much faster once its model is mature, as the company collects significantly more data from much more diverse environments all over the world.